How to Leverage llms.txt for Your Website SEO

As artificial intelligence transforms how users interact with the web, search is no longer limited to blue links and traditional rankings.

Platforms like Google’s Search Generative Experience (SGE), ChatGPT, and Perplexity.ai now rely on Large Language Models (LLMs) to deliver fast, conversational answers sourced from across the internet.

This shift benefits users but raises important questions for website owners:

- Are AI tools using your content without permission?

- Can you control which AI bots visit your site?

- How does this affect your SEO?

That’s where llms.txt comes in.

What is llms.txt?

llms.txt is a simple text file that guides how AI bots interact with your content.

It functions similarly to robots.txt, but instead of guiding search engine crawlers, it gives AI crawlers, like GPTBot, ClaudeBot, and Google-Extended, specific instructions on what they can or cannot access and use from your site.

Whether you want to increase your visibility in AI-powered search tools or protect premium content from being scraped for model training, implementing llms.txt puts you in control of how your site interacts with this new era of AI-driven discovery.

Why Does It Matter?

- Control your content: Decide what AI tools can use to train their models.

- Boost SEO visibility: Allow trusted bots to improve your appearance in AI-generated answers.

- Protect premium or private info: Block unwanted AI crawlers from scraping your site.

Why llms.txt Matters for SEO and Content Strategy

- Control Over AI Answers: By specifying which content AI models can access, you can prevent the misuse of your content in chatbots while allowing trusted LLMs to utilize it.

- Visibility in AI Search: Blocking all LLMs may result in your content being excluded from AI-generated answers, reducing your site’s visibility in AI-powered search results.

- Content Protection: Implementing llms.txt helps prevent unauthorized scraping of premium or private content by AI crawlers.

It works like a robots.txt file but is made for language model bots.

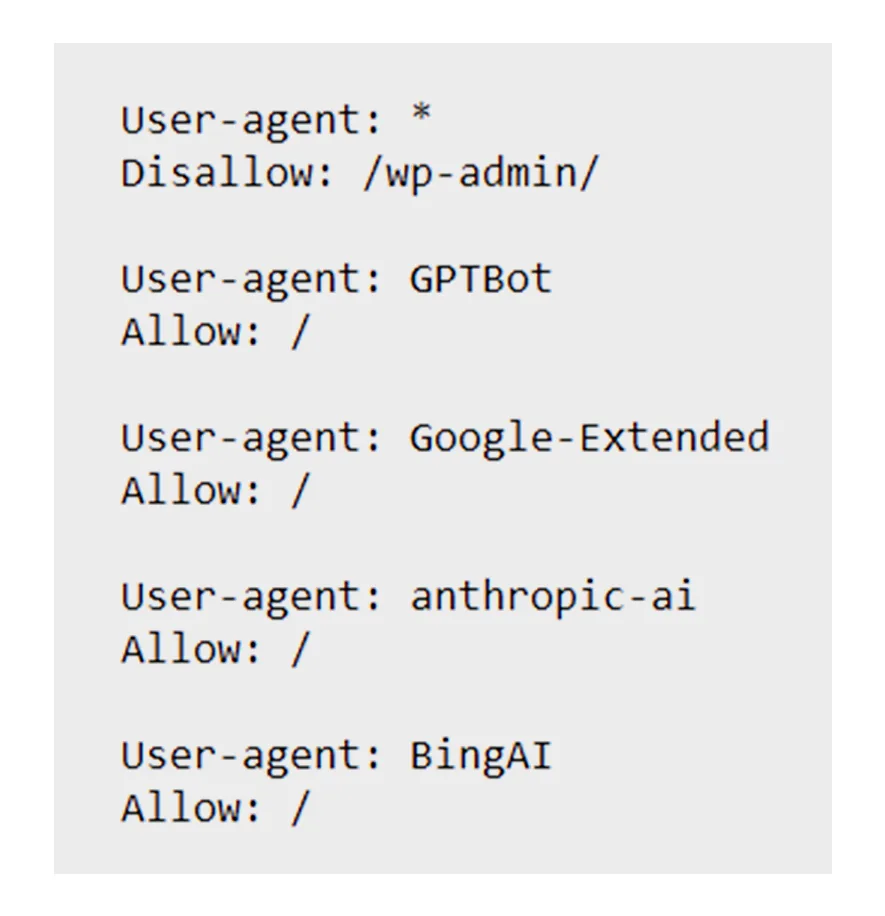

Basic Example:

User-agent: Google-Extended

Allow: /

User-agent: GPTBot

Disallow: /premium-content/

Allow: /blog/

User-agent: *

Disallow: /

What the Rules Mean:

- User-agent: The name of the AI bot you want to control.

- Allow: Which pages or folders the bot can read.

- Disallow: Which pages or folders the bot cannot read.

- # (comment): A note that explains why a rule exists. Bots ignore it.
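To see how rules like these would be evaluated, here is a minimal sketch that reuses Python's standard `urllib.robotparser`. It assumes llms.txt follows robots.txt-style syntax exactly as in the example above, and that a crawler applies the rules the way this parser does (the first matching rule in a group wins); real AI crawlers may behave differently. The paths being tested are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The example rules from this article, one directive per line.
LLMS_TXT = """\
User-agent: Google-Extended
Allow: /

User-agent: GPTBot
Disallow: /premium-content/
Allow: /blog/

User-agent: *
Disallow: /
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt-style rules from a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(LLMS_TXT)

# Hypothetical paths, used only to exercise the rules.
checks = [
    ("Google-Extended", "/pricing"),
    ("GPTBot", "/blog/my-post"),
    ("GPTBot", "/premium-content/report"),
    ("SomeOtherBot", "/blog/my-post"),
]
for agent, path in checks:
    verdict = "allowed" if parser.can_fetch(agent, path) else "blocked"
    print(f"{agent:16} {path:26} {verdict}")
```

Under this parser, GPTBot can still read any path its group does not mention (for example /pricing), because no rule matches. If you want GPTBot limited to /blog/ only, its group would also need a final `Disallow: /`.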

Why llms.txt Must Be in Your Root Directory

Placing it in a subfolder means LLMs won’t see it, and may assume full access.

AI bots only check this exact location, i.e., your root directory. If the file is in a subfolder or misnamed (like llm.txt or LLM.TXT), it will be ignored, leaving your content exposed.

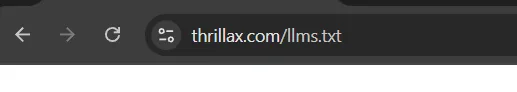

Pro Tip

Use Google’s URL Inspection Tool to verify llms.txt is live in your root directory. For non-Google AI bots, check server logs or use an LLM validator tool. You can also check the file directly by opening yourdomain.com/llms.txt in your browser.
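The location check can also be scripted. The sketch below is one possible approach using only Python's standard library: `is_root_llms_txt` tests that a URL points at exactly /llms.txt in the root, and `fetch_status` (which needs network access) returns the HTTP status code. Both function names are illustrative, and some servers may not answer HEAD requests, so treat the live check as a rough signal.

```python
import urllib.error
import urllib.request
from urllib.parse import urlsplit


def is_root_llms_txt(url: str) -> bool:
    """True only if the URL path is exactly /llms.txt (root level, lowercase)."""
    return urlsplit(url).path == "/llms.txt"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a URL (requires network access)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


# Placeholder domain from this article; the last three are the mistakes described above.
for candidate in [
    "https://yourdomain.com/llms.txt",
    "https://yourdomain.com/docs/llms.txt",
    "https://yourdomain.com/llm.txt",
    "https://yourdomain.com/LLM.TXT",
]:
    print(candidate, is_root_llms_txt(candidate))

# To run the live check against your own site:
# print(fetch_status("https://yourdomain.com/llms.txt"))
```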

llms.txt vs. robots.txt: What’s the Difference?

| Feature | robots.txt | llms.txt |
|---|---|---|
| Target | Search engine crawlers (e.g., Googlebot) | AI models (e.g., GPTBot, ClaudeBot) |
| SEO Impact | Organic rankings | AI-generated answers (SGE, Bard) |
| Control Focus | Crawling/indexing | Content usage in AI training |

Critical Note:

“Don’t assume one file covers the other. Keep your AI crawler rules in both robots.txt and llms.txt for full control.”

Real-World Use Case

A news site might:

- Allow Googlebot in robots.txt for SEO.

- Block GPTBot in llms.txt to avoid AI training on paid articles.
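As a rough illustration of this use case, the following sketch renders the two files from a small Python dictionary. The helper `render_rules` and the path /paid-articles/ are invented for the example, and the output assumes the robots.txt-style syntax used throughout this article.

```python
def render_rules(groups: dict[str, list[tuple[str, str]]]) -> str:
    """Render {user_agent: [(directive, path), ...]} as robots.txt-style text."""
    blocks = []
    for agent, rules in groups.items():
        lines = [f"User-agent: {agent}"]
        lines += [f"{directive}: {path}" for directive, path in rules]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"


# Hypothetical news-site policy; /paid-articles/ is an invented path.
robots_txt = render_rules({"Googlebot": [("Allow", "/")]})
llms_txt = render_rules({"GPTBot": [("Disallow", "/paid-articles/")]})

print("robots.txt:")
print(robots_txt)
print("llms.txt:")
print(llms_txt)
```

Generating both files from one source of truth is a design choice that makes it harder for the two to drift apart over time.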

Need help with crawler control? Compare Adobe’s robots.txt rules with llms.txt.

Step-by-Step: How to Set Up llms.txt

1. Create the File

Use a text editor (e.g., Notepad++) to create the llms.txt file.

2. Add Your Rules

Add the instructions for which AI bots can or can’t access different parts of your website.

3. Upload to Your Root Directory

Use FTP, cPanel, or your CMS to place it at https://yourdomain.com/llms.txt.

4. Validate the Syntax

Visit llmstxt.org to check for errors.

5. Monitor AI Bot Activity

Use server logs or platforms like Cloudflare to track visits from:

- GPTBot (OpenAI)

- ClaudeBot (Anthropic)

- Google-Extended (Google AI)
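For the monitoring step, a small script can count crawler visits in an access log. This is a minimal sketch: the log lines and user-agent strings are invented and simplified, and matching on a substring is only a first approximation because user-agent headers can be spoofed. Note that Google documents Google-Extended as a control token without its own user-agent string, so it is left out here because it would not appear in logs.

```python
from collections import Counter

# Crawler tokens from this article that send their own user-agent string.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot")


def count_ai_bot_hits(log_lines, tokens=AI_BOT_TOKENS) -> Counter:
    """Count log lines that mention each crawler token (case-insensitive)."""
    hits = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in tokens:
            if token.lower() in lowered:
                hits[token] += 1
    return hits


# Invented sample lines in the common "combined" access-log layout.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jan/2025:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 512 "-" "Mozilla/5.0; compatible; GPTBot/1.0"',
    '203.0.113.9 - - [01/Jan/2025:10:00:05 +0000] "GET /llms.txt HTTP/1.1" 200 120 "-" "Mozilla/5.0; compatible; ClaudeBot/1.0"',
    '198.51.100.7 - - [01/Jan/2025:10:00:09 +0000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    '203.0.113.5 - - [01/Jan/2025:10:01:00 +0000] "GET /blog/post HTTP/1.1" 200 700 "-" "Mozilla/5.0; compatible; GPTBot/1.0"',
]

hits = count_ai_bot_hits(SAMPLE_LOG)
for token in AI_BOT_TOKENS:
    print(token, hits[token])
```

To use it on a real log, pass an open file object instead of `SAMPLE_LOG`, since the function only needs an iterable of lines.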

Best Practices for AI Search Visibility

- Allow Trusted Bots

Allow GPTBot, ClaudeBot, and Google-Extended if you want your content to appear in their AI-generated answers.

- Block Sensitive or Premium Content

/admin/, /paywall/, /client-portal/ are common protected paths.

- Sync with robots.txt

Make sure both files align. Avoid contradictions.

- Keep It Updated

Update your llms.txt file regularly as your site and the AI ecosystem evolve.

- Use Meta Tags for Extra Control

Add a page-level meta tag to individual pages to prevent AI indexing.

This tag asks AI programs not to read or learn from this page. It’s like a “please don’t use my content for AI” sign.
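The "Sync with robots.txt" practice above can be checked mechanically. The sketch below parses both files with Python's standard `urllib.robotparser` and reports every agent and path where the two disagree. It assumes both files use robots.txt-style syntax as in this article; the sample rules, the function names, and the paths are invented for illustration.

```python
from urllib.robotparser import RobotFileParser


def parse_rules(text: str) -> RobotFileParser:
    """Parse robots.txt-style rules from a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def find_conflicts(robots_text, llms_text, agents, paths):
    """Return (agent, path, robots_verdict, llms_verdict) where the files disagree."""
    robots, llms = parse_rules(robots_text), parse_rules(llms_text)
    conflicts = []
    for agent in agents:
        for path in paths:
            in_robots = robots.can_fetch(agent, path)
            in_llms = llms.can_fetch(agent, path)
            if in_robots != in_llms:
                conflicts.append((agent, path, in_robots, in_llms))
    return conflicts


# Invented sample files that contradict each other on /blog/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /
"""
LLMS_TXT = """\
User-agent: GPTBot
Disallow: /paywall/
Allow: /blog/
"""

conflicts = find_conflicts(ROBOTS_TXT, LLMS_TXT, ["GPTBot"], ["/blog/", "/paywall/"])
for agent, path, in_robots, in_llms in conflicts:
    print(f"{agent} {path}: robots.txt allows={in_robots}, llms.txt allows={in_llms}")
```

Here robots.txt blocks GPTBot everywhere while llms.txt invites it into /blog/, so the script flags /blog/ and leaves /paywall/ alone because both files block it.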

Common Pitfalls to Avoid

- Blocking All AI Bots by Default

This may hurt your site’s appearance in AI search results.

- Typos in Syntax

A small mistake can break the file. Always validate it.

- Forgetting to Update It

If you launch new content or restructure your site, make sure llms.txt reflects those changes.

- Not Documenting Your Rules

Use comments to explain why each rule exists. This helps maintain consistency across your team.
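Syntax typos are easy to catch with a small linter before uploading. The following is a minimal sketch, assuming the file uses only the three directives described in this article (User-agent, Allow, Disallow) plus # comments; the function name `lint_rules` and the sample input are illustrative.

```python
import re

# Directives described in this article; anything else is flagged.
DIRECTIVE_RE = re.compile(r"^(user-agent|allow|disallow)\s*:\s*(\S.*)?$", re.IGNORECASE)


def lint_rules(text: str) -> list[tuple[int, str]]:
    """Return (line_number, line) for lines that are not blank, comments, or known directives."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments
        if line and not DIRECTIVE_RE.match(line):
            problems.append((number, raw))
    return problems


# Sample with two deliberate mistakes: a misspelled directive and a missing colon.
SAMPLE = """\
# Allow AI bots to access blog content
User-agent: GPTBot
Alow: /blog/
Disallow /premium-content/
"""

for number, line in lint_rules(SAMPLE):
    print(f"line {number}: {line}")
```

This only checks the shape of each line. It does not confirm that a path exists on your site or that a given crawler honors the rule.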

The Future of SEO with LLMs

AI-first search is already here. In the coming years, it will only grow more influential.

What to Expect:

- More AI crawlers will start respecting llms.txt.

- New rules (such as AI-snippet: allow) may be introduced for finer control.

- AI optimization will become as important as traditional SEO.

Next-Gen Optimization:

- Sites that manage both human SEO and AI crawler visibility will dominate the evolving SERP landscape.

- Websites that adapt early will have a strong edge in future search visibility.

- Make sure your llms.txt rules match your search strategy, so the content you want surfaced stays open to both AI and organic search.

Conclusion and Next Steps

Recap:

- The llms.txt file tells AI bots what parts of your website they can see and use.

- It helps keep private or special content safe from being copied by AI.

- It also helps trusted AI bots find and show your website in their answers.

What You Should Do Next:

- Create your llms.txt file

- Ensure the llms.txt file is located in the root directory of your website

- Validate it using llmstxt.org

- Monitor and maintain it regularly

If you’re serious about staying visible and protected in the age of AI search, implementing llms.txt is a smart and timely move.

Frequently Asked Questions About llms.txt

What is the difference between robots.txt and llms.txt?

robots.txt controls how traditional search engine bots (like Googlebot) crawl and index your site.

llms.txt is specifically for AI crawlers, such as GPTBot or ClaudeBot, and controls how your content is used for training language models or appearing in AI-generated search results.

Do I need both robots.txt and llms.txt?

Yes. These files serve different purposes. Use robots.txt to manage traditional search engine behavior and llms.txt to manage AI crawler behavior. For full control, both files should be used in coordination.

Will blocking AI crawlers affect my Google rankings?

Blocking AI crawlers won’t affect your traditional Google rankings. But if you block them, your content might not appear in AI-powered answers or chatbots.

Where should I place the llms.txt file?

The file must be placed in the root directory of your website:

https://yourdomain.com/llms.txt

If it’s in a subfolder or named incorrectly, AI crawlers will ignore it.

Which AI crawlers should I allow or block?

Allow trusted AI crawlers like Google-Extended, GPTBot, or ClaudeBot if you want your content featured in AI search results. Block any unknown, unauthorized, or suspicious crawlers to protect private or premium content.

Is llms.txt a legal requirement?

No, it’s not a legal requirement. However, it’s quickly becoming a best practice for site owners who want to control how their content is used by AI systems. Many AI companies follow the rules in the file, but it’s your choice to have one or not.

Can I add comments to llms.txt?

Yes. You can use # to add comments in the file. For example:

# Allow AI bots to access blog content

User-agent: GPTBot

Allow: /blog/

This helps you and your team document your decisions.

How often should I update llms.txt?

Update it whenever:

- You launch new sections of your site

- You want to adjust access rules

- New LLM crawlers emerge

Regular reviews (monthly or quarterly) are a good habit to ensure full coverage.