Why AI Search Ignores Your SEO Content (And How to Fix the Prompt Gap)

The Search Behavior Split

Search is no longer one behavior.

Users search Google with keywords, but they ask AI tools full questions with context. And most content today is optimized for only one of those behaviors, making it invisible to the other.

This creates a growing disconnect between:

- How people express intent, and

- How content is written and structured

If your content ranks on Google but never gets cited by ChatGPT, Claude, or Perplexity, you are experiencing what we will call the prompt gap.

In this article, we will break down:

- How search behavior differs between Google and AI tools

- Why traditional SEO content fails conversational prompts

- Real examples of the prompt gap

- Practical strategies to bridge it

- Content formats that perform well in both environments

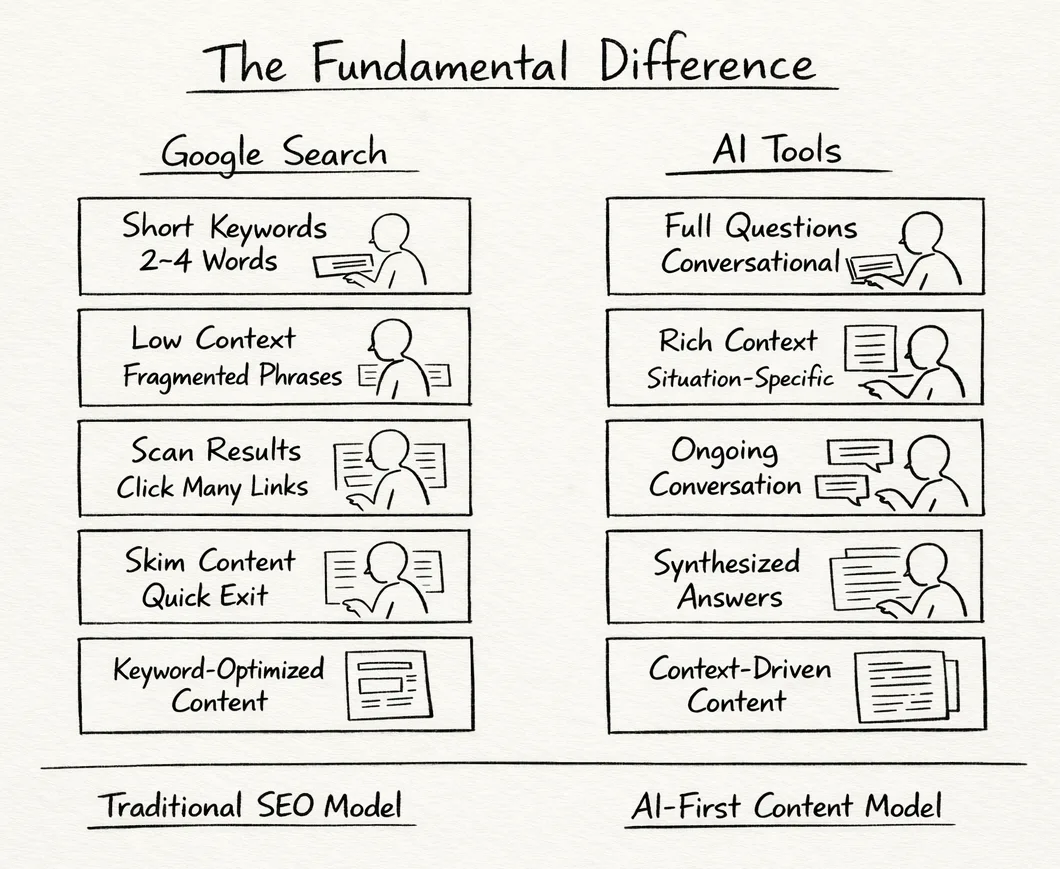

The Fundamental Difference

How People Use Google

Google search behavior is keyword-driven.

Typical Google searches:

- “email marketing best practices”

- “reduce customer churn”

- “project management software small business”

- “SEO timeline results”

Characteristics:

- Short (typically 2-4 words)

- Keyword-focused

- Fragmented phrases

- Little to no context

- Written for search engine interpretation, not human conversation

User behavior:

- Type minimal keywords

- Scan the results page

- Click multiple links

- Skim content quickly

- Bounce back if the answer isn’t immediate

How content is optimized for Google:

- Target specific keywords

- Use keywords in titles, H1s, and headers

- Optimize for featured snippets

- Keep paragraphs short and scannable

- Monitor keyword density

This model worked well for years.

But it’s no longer the only way people search.

How People Use AI Tools

AI tools are used conversationally.

Typical ChatGPT / Claude / Perplexity prompts:

- “What are the most effective email marketing strategies for a B2B SaaS company with a small list under 1,000 subscribers?”

- “How can I reduce customer churn when most cancellations happen after three months?”

- “I need project management software for a remote team of 12 people, currently using spreadsheets and Slack. What should we consider?”

- “How long does SEO take to show results for a new website in a competitive industry?”

Characteristics:

- Long (15-50 words is common)

- Natural, conversational language

- Rich with context

- Situation-specific

- Framed as questions

- Often refined through follow-ups
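The length and phrasing differences listed above can be turned into a rough heuristic for sorting queries from your own search or chat logs. The sketch below is only an illustration: the function name `classify_query`, the word-count thresholds (borrowed from the 2-4 and 15-50 word ranges quoted in this article), and the list of question starters are all assumptions, not an established standard.

```python
# Thresholds borrowed from the ranges quoted in this article; treat them as rough guides.
KEYWORD_MAX_WORDS = 4
PROMPT_MIN_WORDS = 15

# Hypothetical list of words that often open a question.
QUESTION_STARTERS = ("what", "how", "why", "which", "should", "can", "is", "do", "does")


def classify_query(query: str) -> str:
    """Label a query 'keyword', 'conversational', or 'mixed' by length and phrasing."""
    words = query.lower().split()
    looks_like_question = query.strip().endswith("?") or (
        bool(words) and words[0] in QUESTION_STARTERS
    )
    # Short and not phrased as a question: typical search-box input.
    if len(words) <= KEYWORD_MAX_WORDS and not looks_like_question:
        return "keyword"
    # Long, or phrased as a question: typical AI prompt.
    if len(words) >= PROMPT_MIN_WORDS or looks_like_question:
        return "conversational"
    # Anything in between does not clearly belong to either style.
    return "mixed"


if __name__ == "__main__":
    for q in [
        "email marketing best practices",
        "How can I reduce customer churn when most cancellations happen after three months?",
    ]:
        print(classify_query(q), "|", q)
```

A heuristic like this will misclassify some queries, so it is best used to get a rough sense of how your audience phrases things rather than as a precise measurement.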

User behavior:

- Ask complete questions

- Provide context upfront

- Have an ongoing conversation

- Rely on AI to synthesize answers

- Rarely visit multiple sources

Content AI tools prefer:

- Natural language (not keyword stuffing)

- Comprehensive explanations

- Contextual relevance

- Answers to why and how, not just what

- Nuance, caveats, and edge cases

This is where most SEO content breaks.

This shift from fragmented keywords to full, conversational intent is part of a broader transition already reshaping search behavior, which we explore in more depth in From Keywords to Conversations.

The Prompt Gap: Real Examples

Example 1: Email Marketing

Google search: “email marketing tips”

AI prompt: “I run a small e-commerce store selling handmade jewelry. My list has about 800 subscribers, but my open rates are only 12%. What should I do to improve engagement without sounding too salesy?”

The gap:

Most content optimized for “email marketing tips” includes:

- A generic list of 10 tips

- No industry specificity

- No acknowledgment of list size

- No reference to a 12% open rate

- No balance between value and selling

What the AI prompt actually needs:

- E-commerce-specific tactics

- Advice for small lists

- Open-rate benchmarks and diagnosis

- Strategies that reduce sales pressure

- Clear, actionable next steps

Who wins?

Content that addresses the full context, not just the keyword.

Example 2: SaaS Metrics

Google search:

- “SaaS metrics”

- “important SaaS metrics to track”

AI prompt:

“We are a B2B SaaS company at $500K ARR with 45 customers and planning to raise a seed round. What metrics will investors care about, and what benchmarks should we hit?”

The gap:

Keyword-optimized content typically:

- Lists 15-20 metrics

- Explains each definition

- Treats all metrics as equally important

- Ignores the company stage

What the AI prompt needs:

- Seed-stage-specific metrics

- Investor-centric framing

- Benchmarks for $500K ARR

- Prioritization (top 5-7 metrics)

- Context on what “good” looks like

Example 3: SEO Services

Google search:

“SEO services for small business”

AI prompt:

“I own a plumbing company in Austin with three employees. We rely on referrals but want more online leads. Is SEO worth it, or should we focus on Google Ads? Budget is $1,000/month.”

The gap:

Typical keyword content covers:

- What SEO services include

- Generic benefits

- Pricing ranges

- How to choose an agency

What the AI prompt needs:

- Local service business advice

- SEO vs. Google Ads trade-offs

- Realistic outcomes for $1,000/month

- Timelines and expectations

- Whether SEO even makes sense here

Some SEO practitioners have echoed this in community discussions. One Reddit thread characterizes traditional SEO as nearly irrelevant for AI-driven answers, arguing that generative engines evaluate content by different criteria, such as brand mentions and contextual coverage, rather than backlinks or domain authority (DA).

Reddit thread: “SEO vs GEO – I may have cracked a way to rank on Ai” (by u/Narrow-Resident-3396 in r/content_marketing)

Why This Gap Matters

Impact on Visibility

Traditional SEO still works for Google.

If you rank #1 for “email marketing tips”:

- You capture keyword-based traffic

- You are visible to traditional searchers

But in AI-mediated search:

- Keyword-only content often isn’t cited

- AI favors context-rich answers

- Your content becomes invisible to conversational discovery

This disconnect is already surfacing in the SEO community; several practitioners have shared cases where pages rank well on Google but receive zero visibility in ChatGPT, Gemini, or AI Overviews, highlighting that keyword rankings alone don’t guarantee inclusion in AI-generated answers.

Reddit thread: “Why does my website have ZERO AI visibility? (ChatGPT, Gemini, AI Overviews all show 0)” (by u/Capable_Quality_6790 in r/SEO)

The trend:

- 2023: ~85% Google, 15% AI

- 2024: ~75% Google, 25% AI

- 2025: ~65% Google, 35% AI

- 2026: Continued shift toward AI-assisted research

Your content must serve both.

Impact on Content Performance

Keyword-only structure:

Title: “10 Email Marketing Best Practices”

- Generic list

- Broad advice

- No context

- Shallow explanations

AI performance:

- Occasionally cited for generic queries

- Rarely selected for real-world prompts

Conversational structure:

Title: “How to Improve Email Open Rates for Small E-commerce Businesses”

Includes:

- Industry context

- Benchmarks by list size

- Diagnosis before solutions

- Specific implementation steps

- Realistic timelines

AI performance:

- Frequently cited

- Matches conversational prompts

- More useful for decision-making

Many marketers are now openly acknowledging this blind spot. One Reddit conversation describes how teams see clear brand lift from AI mentions but struggle to track it using analytics, rankings, or attribution models designed for traditional search.

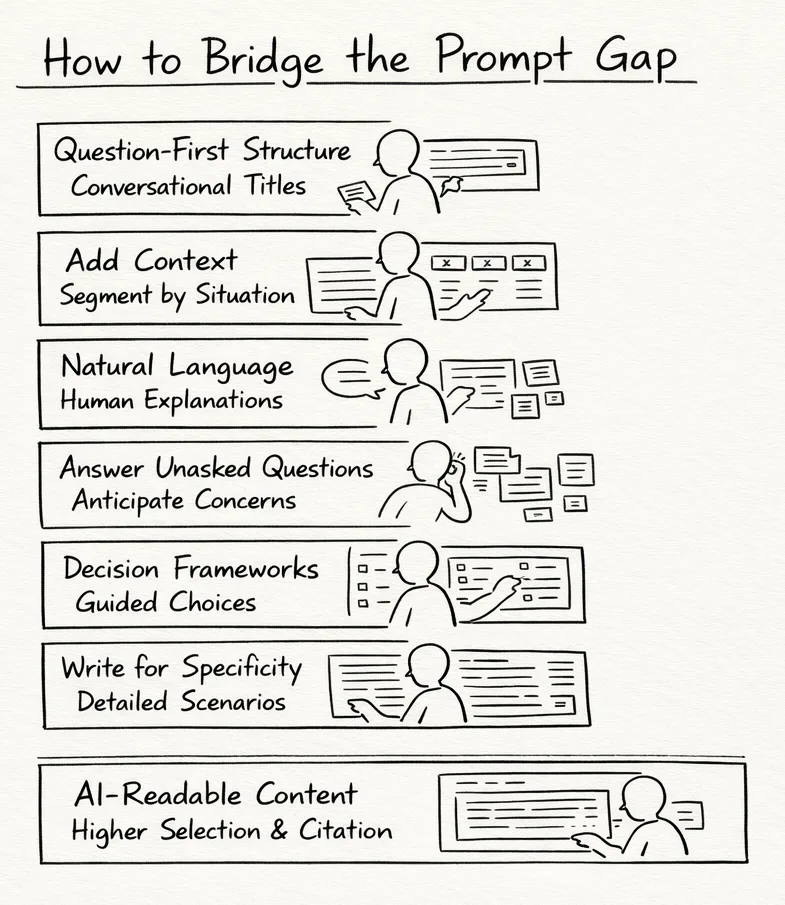

How to Bridge the Prompt Gap

Strategy 1: Question-First Content Structure

Keyword-focused titles such as “Customer Retention Strategies” or “How to Reduce Churn” are optimized for search engines, but they rarely reflect how users frame questions in AI tools.

Conversational titles, on the other hand, mirror real prompts. Examples include “Why Do SaaS Customers Cancel After 3 Months?” or “How Can Small Subscription Businesses Reduce Churn on a Budget?” These titles signal context, intent, and situational awareness, which makes them far more likely to be selected and cited by AI systems.

This approach works because it aligns content structure with how users actually ask questions, rather than how keywords are traditionally formatted for rankings.

This is also why content organized around real questions consistently outperforms list-based pages in AI answers, a pattern we break down further in Why Structured, Question-Driven Content Performs Better in AI Search.

Strategy 2: Add Context Throughout Content

Instead of universal advice, segment by situation.

For example, segment advice by:

- Pricing tier

- Business model

- Company size

- Customer type

This allows AI to extract the right section for the user’s context.

Strategy 3: Embrace Natural Language

Keyword stuffing often sounds robotic and unnatural, which reduces clarity for both readers and AI systems. Natural language, by contrast, reflects how people actually explain problems and solutions, making content easier for AI tools to interpret and reuse.

AI systems understand human explanations far better than repetitive keyword patterns, and readers respond the same way.

Strategy 4: Answer the Unasked Questions

Users rarely ask just one thing.

Good content anticipates:

- Cost beyond pricing pages

- Implementation effort

- Adoption challenges

- Risk of choosing wrong

- Migration concerns

This reduces follow-up questions, something AI tools reward.

Strategy 5: Include Decision Frameworks

Lists don’t help people decide.

Frameworks do.

Decision matrices, step-by-step filters, and scenarios allow:

- Self-diagnosis

- Personalized outcomes

- Better AI synthesis
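A decision matrix of the kind mentioned above can be sketched as a small weighted-scoring function. Everything in this example is hypothetical: the option names, the criteria (borrowed from the "unasked questions" listed in Strategy 4), the weights, and the 1-5 scores are invented purely to show the mechanics, and a real article would replace them with values that fit its readers.

```python
def score_options(
    weights: dict[str, float],
    scores: dict[str, dict[str, float]],
) -> dict[str, float]:
    """Return the weighted total for each option (higher is better)."""
    return {
        option: sum(weights[criterion] * value for criterion, value in criteria.items())
        for option, criteria in scores.items()
    }


# Hypothetical weights (they sum to 1) reflecting what one reader cares about most.
weights = {"total_cost": 0.40, "implementation_effort": 0.35, "migration_risk": 0.25}

# Hypothetical 1-5 scores, where 5 is the most favorable for the reader.
scores = {
    "Option A": {"total_cost": 4, "implementation_effort": 2, "migration_risk": 3},
    "Option B": {"total_cost": 3, "implementation_effort": 4, "migration_risk": 4},
}

totals = score_options(weights, scores)
best = max(totals, key=totals.get)

if __name__ == "__main__":
    for option, total in totals.items():
        print(f"{option}: {total:.2f}")
    print("Highest score:", best)
```

Publishing the criteria and weights alongside the recommendation lets readers swap in their own priorities, which is the self-diagnosis and personalization benefit described above.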

Strategy 6: Write for Specificity

Generic advice competes with thousands of pages.

Specific advice:

- Signals expertise

- Matches detailed prompts

- Gets cited more often

“Email Deliverability for E-commerce Stores Sending 50K+ Emails Monthly” is far more likely to match a detailed prompt than “Email Deliverability Tips.”

Content Formats That Work for Both

- Comprehensive guides structured around questions and answers

- Scenario-based content that reflects real user situations

- Problem, diagnosis, and solution-driven content

These formats satisfy keyword intent, serve conversational prompts, and provide the depth AI tools prefer.

Testing for the Prompt Gap

- Search the target keyword on Google and check if the page ranks

- Ask AI tools realistic prompts on the same topic and check whether the page gets cited

- Compare the results

If a page ranks but is not cited, a prompt gap likely exists. If it is cited but does not rank, the issue is usually the SEO structure.
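The comparison above can be recorded in a simple script once you have checked each page by hand. This is a minimal sketch under stated assumptions: `PageCheck` and `diagnose` are hypothetical names, the observations are entered manually rather than fetched from any API, and the last two outcomes ("serving both" and "review both") are additions that the rule above does not cover.

```python
from dataclasses import dataclass


@dataclass
class PageCheck:
    """Manually recorded observations for one page (hypothetical structure)."""
    url: str
    ranks_on_google: bool  # did the page rank for its target keyword?
    cited_by_ai: bool      # did an AI tool cite it for a realistic prompt?


def diagnose(check: PageCheck) -> str:
    """Apply the ranks-versus-cited comparison to a single page."""
    if check.ranks_on_google and not check.cited_by_ai:
        return "prompt gap likely"
    if check.cited_by_ai and not check.ranks_on_google:
        return "review SEO structure"
    if check.ranks_on_google and check.cited_by_ai:
        return "serving both"  # assumption: not covered by the rule above
    return "review both"       # assumption: neither ranking nor cited


if __name__ == "__main__":
    # Example URLs and results are placeholders, not real measurements.
    checks = [
        PageCheck("https://example.com/email-marketing-tips", True, False),
        PageCheck("https://example.com/open-rates-small-ecommerce", False, True),
    ]
    for check in checks:
        print(check.url, "->", diagnose(check))
```

Because AI answers vary between sessions and tools, it helps to repeat each prompt a few times before recording whether a page was cited.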

Your Content Isn’t Broken, It’s Just Answering the Wrong Questions

Search behavior is splitting.

Some of your audience still type short keywords into Google.

Others are asking AI tools long, context-rich questions and expecting synthesized answers.

If your content ranks but still feels invisible, you are not imagining it.

Most teams aren’t bad at SEO. They are just optimizing for how people searched five years ago, not how they ask questions today. The prompt gap doesn’t show up in rankings, traffic, or attribution reports. It shows up quietly, when AI tools answer the exact questions your buyers are asking, but pull context from someone else’s content.

The good news is that you don’t need to choose between Google and AI.

Content that works for conversational AI usually ranks better on Google, too, because it mirrors how real people think, evaluate options, and make decisions.

If you want to pressure-test:

- Whether your existing content matches real AI prompts

- Which pages are keyword-optimized but context-poor

- Where conversational structure would actually change visibility (and where it wouldn’t)

You can share a bit of context with us here: https://tally.so/r/3EGEd4

No rewrites promised.

No content packages.

No “AI SEO” buzzwords.

Just a short form to understand what you are publishing, who it’s for, and whether bridging the prompt gap would materially change how your content gets discovered.

If it’s a fit, we will say so.

If it’s not, you will still walk away knowing why your content is, or isn’t, showing up in AI answers.

Common Questions About the Prompt Gap

What is the prompt gap?

The prompt gap is the mismatch between how content is written (keyword-focused) and how users ask AI tools questions (context-rich, conversational). Content can rank well on Google and still be invisible to AI answers if it doesn’t match how prompts are structured.

Does writing for conversational prompts hurt Google rankings?

No. In most cases, it improves them. Content that uses natural language, answers real questions, and provides context tends to perform better for long-tail and intent-driven searches on Google as well.

Why can a page rank on Google but not get cited by AI tools?

Because ranking algorithms reward relevance signals and backlinks, while AI systems prioritize clarity, explanatory depth, and contextual usefulness. A page can rank highly yet still be unusable as a direct answer.

Is this the same as optimizing for AI systems?

Not exactly. The prompt gap is about how users express intent, not about gaming AI systems. It focuses on writing content that mirrors real questions and decision-making contexts, which AI tools can then use naturally.

How can I tell if my content has a prompt gap?

Test it both ways:

- Search the target keyword on Google

- Ask an AI tool a realistic, detailed question related to the same topic

If your page ranks but isn’t cited, or is outperformed by more specific content, you likely have a prompt gap.

Do I need to rewrite all of my content?

No. Many teams see results by updating:

- Titles and headers to be question-based

- Introductions to acknowledge context

- Sections that add scenarios, constraints, or decision frameworks

Small structural changes often make a big difference.

Does the prompt gap only matter for simple informational queries?

No. Addressing it is especially useful for:

- High-intent buyers

- Complex B2B decisions

- Products or services with multiple use cases

These users already think in questions, not keywords.

Will AI tools replace Google?

Unlikely in the short term. What’s happening instead is a split: Google remains strong for discovery and navigation, while AI tools dominate research, comparison, and decision-making.

What is the biggest mistake teams make when trying to close the prompt gap?

Trying to “add AI keywords” instead of restructuring content around real questions, context, and decision logic. The goal isn’t to sound AI-friendly; it’s to sound human.